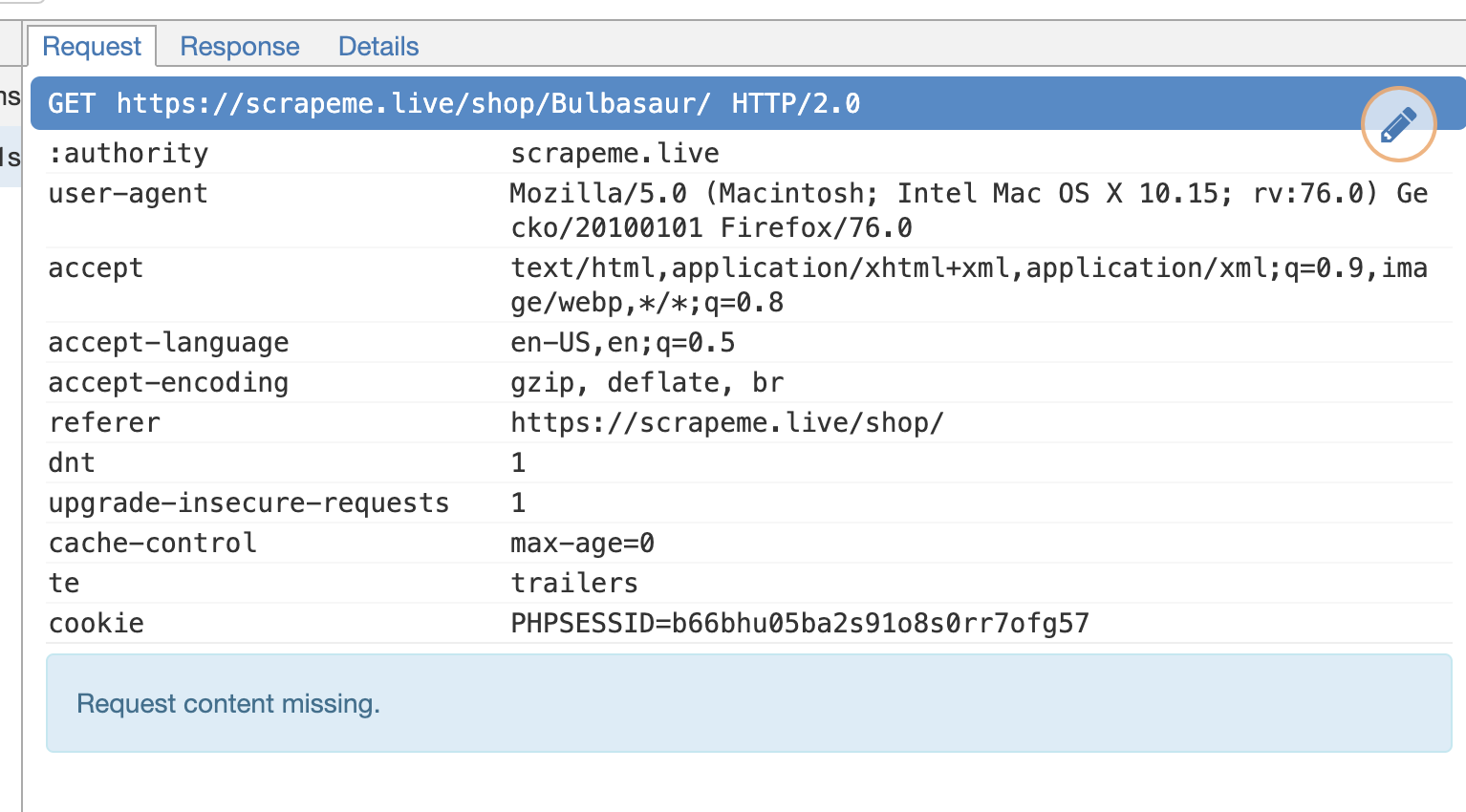

The scraping and the parsing will both be handled by separate Python scripts. Finally, we can parse the data to find relevant information. The data is then stored in a format we can use. Next, we use a program we create in Python to scrape/collect the data we want. If you click on the three vertical dots in the upper right corner of the browser, and then ‘More Tools’ option, and then ‘Developer Tools’, you will see a panel that pops up which looks like the following:įirst, there’s the raw HTML data that’s out there on the web. Other browsers like Firefox and Internet explorer also have developer tools, but for this example, I’ll be using Chrome. If you’re unfamiliar with the structure of HTML, a great place to start is by opening up Chrome developer tools.

#IGNORE IF WEBSITE HAS ERROR WEBSCRAPER FREE#

If you’re already familiar with any of these, feel free to skip ahead.

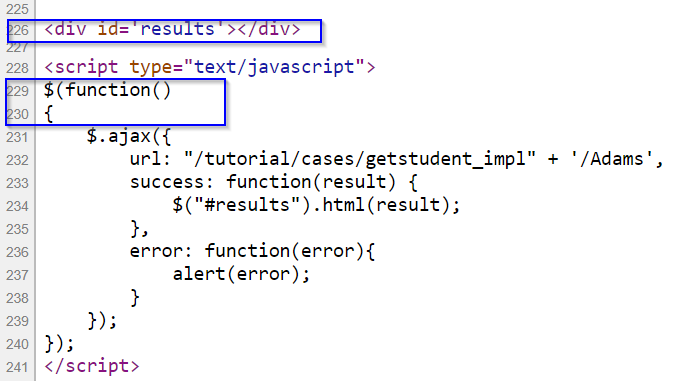
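The four stages described earlier (raw HTML, scrape, store, parse) can be sketched with nothing but the Python standard library. This is a minimal illustration, not the scripts used in this post: the function names, the JSON storage format, and the choice to extract only the page `<title>` are all assumptions made to keep the example short. In practice the two halves would live in two separate scripts, as described above.

```python
import json
from html.parser import HTMLParser
from pathlib import Path
from urllib.request import urlopen


# --- Stage 1: scrape (hypothetically its own script, e.g. scrape.py) ---
def scrape(url: str, out_path: Path) -> None:
    """Download the raw HTML for `url` and store it on disk."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    store_raw(url, html, out_path)


def store_raw(url: str, html: str, out_path: Path) -> None:
    """Save the page and its source URL as JSON so parsing can run later."""
    out_path.write_text(json.dumps({"url": url, "html": html}), encoding="utf-8")


# --- Stage 2: parse (hypothetically its own script, e.g. parse.py) ---
class TitleParser(HTMLParser):
    """Collect the text inside the page's <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def parse(in_path: Path) -> dict:
    """Load a stored page and pull out the piece we care about (here, the title)."""
    record = json.loads(in_path.read_text(encoding="utf-8"))
    parser = TitleParser()
    parser.feed(record["html"])
    return {"url": record["url"], "title": parser.title.strip()}
```

Keeping the raw HTML on disk means the parse step can be rewritten and rerun without downloading the page again.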

The basic idea of web scraping is that we take existing HTML data, use a web scraper to identify the data we want, and convert it into a useful format. For example, when scraping Twitter we might only be interested in collecting tweets that mention a certain word or topic, like “Governor.” We could filter the scrape itself, specifying that we only want a tweet if it contains certain content. Or, it might be easier to collect a larger group of tweets and parse them later on the back end.
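The keyword filtering described above can be a small function that works in either place: inside the scraper, before anything is stored, or on the back end over a larger collection that was already saved. The sketch below assumes tweets are plain strings and that “mentions” means a whole-word, case-insensitive match. Both of these are choices made for illustration.

```python
import re
from typing import Iterable, Iterator


def mentions(text: str, word: str) -> bool:
    """True if `word` appears in `text` as a whole word, ignoring case."""
    return re.search(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE) is not None


def filter_tweets(tweets: Iterable[str], word: str) -> Iterator[str]:
    """Yield only the tweets that mention `word`.

    Call this while scraping to filter beforehand, or over stored
    results to filter later on the back end.
    """
    return (tweet for tweet in tweets if mentions(tweet, word))


collected = [
    "The Governor signed the bill today.",
    "Traffic is heavy downtown.",
    "Questions for the governor's office?",
]
print(list(filter_tweets(collected, "Governor")))
```

This keeps the first and third tweets. Because the match is on whole words, “Governors” would not count as a mention; a plain substring check would be looser, and which behavior is better depends on the data.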


At this point, we could build a scraper that would collect all the tweets on a page. Most of the actual tweets would probably be in a paragraph tag, or have a specific class or other identifying feature. Working out where the information sits on the page takes a little research before we build the scraper.
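Once that research shows which tag and class hold the content, the scraper only needs to collect text from matching elements and ignore everything else. Here is a minimal sketch using Python’s built-in `html.parser`. The class name `tweet-text` is hypothetical, since real Twitter/X markup differs. Also, many modern sites build their content with JavaScript, so the raw HTML from a plain request may not contain the posts at all.

```python
from html.parser import HTMLParser


class TweetTextParser(HTMLParser):
    """Collect the text of every `tag` element whose class list includes `target_class`.

    Everything outside those elements (header, logo, navigation links) is ignored.
    """

    def __init__(self, tag: str = "p", target_class: str = "tweet-text") -> None:
        super().__init__()
        self.tag = tag
        self.target_class = target_class
        self._depth = 0                 # > 0 while inside a matching element
        self._buffer: list[str] = []
        self.tweets: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self._depth:
            # Track nested elements of the same tag so we close at the right one.
            if tag == self.tag:
                self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if self._depth and tag == self.tag:
            self._depth -= 1
            if self._depth == 0:
                self.tweets.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


sample = """
<header><img alt="logo"><nav><a href="/home">Home</a></nav></header>
<div class="tweet"><p class="tweet-text">Hello from the <a href="#">Governor</a></p></div>
<p class="footer">About us</p>
"""
parser = TweetTextParser()
parser.feed(sample)
print(parser.tweets)  # ['Hello from the Governor']
```

Text inside nested tags such as links is kept, so a tweet containing a link still comes out as one string.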

In the above example, we might use a web scraper to gather data from Twitter. We might limit the gathered data to tweets about a specific topic, or by a specific author. As you might imagine, the data that we gather from a web scraper is largely decided by the parameters we give the program when we build it. At the bare minimum, each web scraping project needs a URL to scrape from. Secondly, a web scraper needs to know which tags to look for to find the information we want. In the above example, we can see that the page holds a lot of information we wouldn’t want to scrape, such as the header, the logo, and the navigation links.
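One way to make those parameters explicit is to gather them in a single configuration object: the URL (required), the tag and class to look for, and optional limits such as an author or topic. The dataclass below is a hypothetical sketch. The field names are assumptions, and the topic check is a simple case-insensitive substring test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ScraperConfig:
    """Parameters that decide what a scraper gathers (field names are hypothetical)."""

    url: str                           # bare minimum: the page to scrape
    tag: str = "p"                     # which tag holds the content we want
    css_class: Optional[str] = None    # optional class that narrows the tag match
    author: Optional[str] = None       # optional: keep only posts by this author
    topic: Optional[str] = None        # optional: keep only posts mentioning this text

    def keep(self, post_author: str, text: str) -> bool:
        """Apply the optional author and topic limits to one scraped post."""
        if self.author is not None and post_author.lower() != self.author.lower():
            return False
        if self.topic is not None and self.topic.lower() not in text.lower():
            return False
        return True


config = ScraperConfig(
    url="https://example.com/timeline",  # placeholder URL, not a real endpoint
    css_class="tweet-text",
    author="@some_account",
)
posts = [("@some_account", "Budget update"), ("@someone_else", "Budget update")]
kept = [text for who, text in posts if config.keep(who, text)]
```

Only the post by `@some_account` survives. Changing what the scraper collects then means changing the configuration, not the scraping code.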
